Bring expert ultrasound imaging and diagnostics to rural communities and other remote settings through our patent-pending mixed reality system without anyone needing to travel.

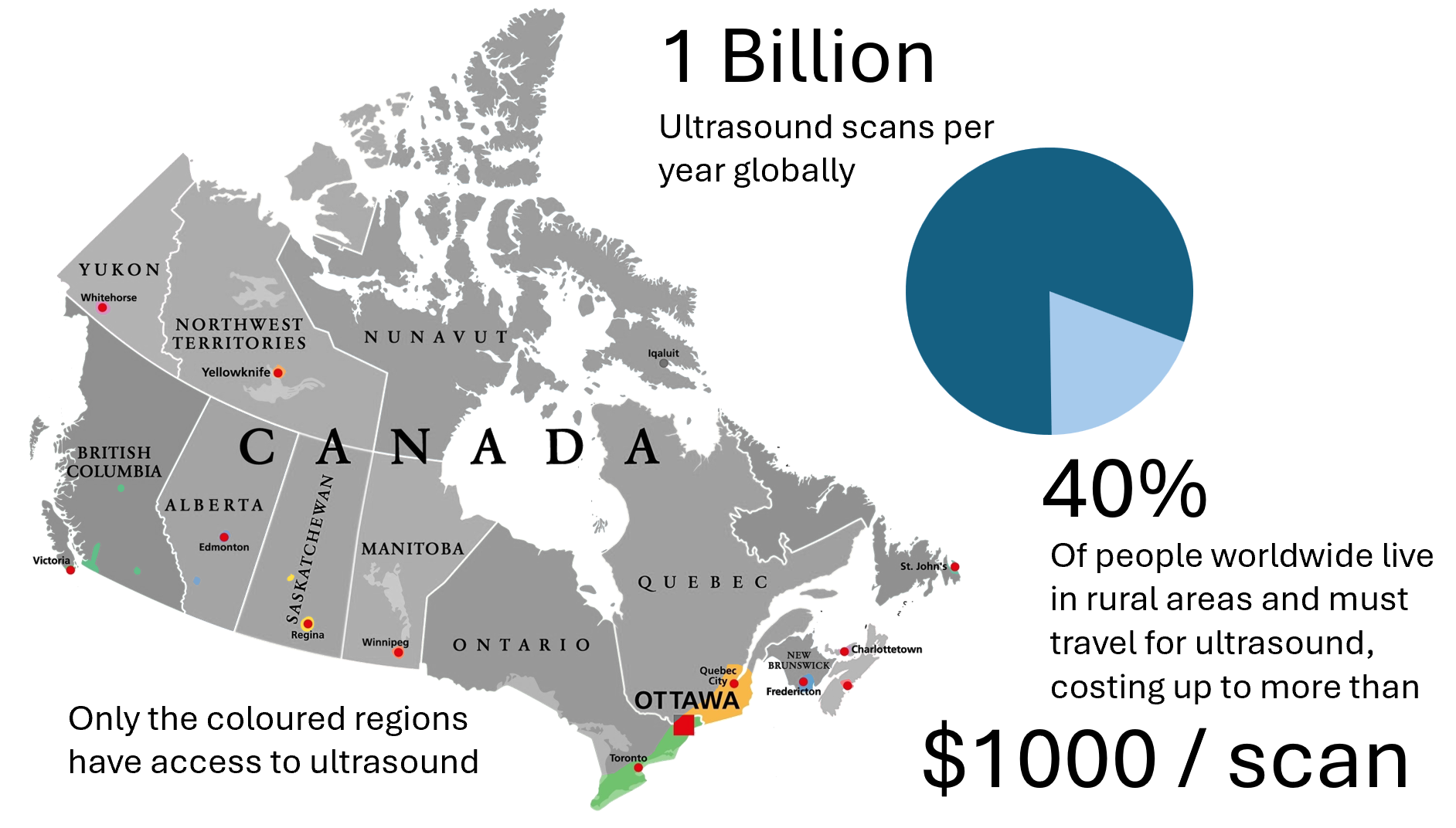

Diagnostic ultrasound is used in every branch of medicine, yet rural communities have little to no access to it. This affects about 1 in 5 people globally. The only practical solution today is for patients to travel for scans. That means time away from work and family, the risks and environmental impact of travel, and costs to healthcare systems of billions of dollars per year. The tele-ultrasound market is estimated to be worth $100 billion (USD), but current solutions do not solve the problem: video guidance is ineffective and imprecise, while robotics is complex, expensive, and inflexible.

Guide Medical is addressing the problem of ultrasound access by enabling expert ultrasound guidance in remote communities. Our system bridges the gap between robotics and video guidance, providing a solution that is easy to use, low-cost, and flexible, while giving the physician precise control and immersive feedback.

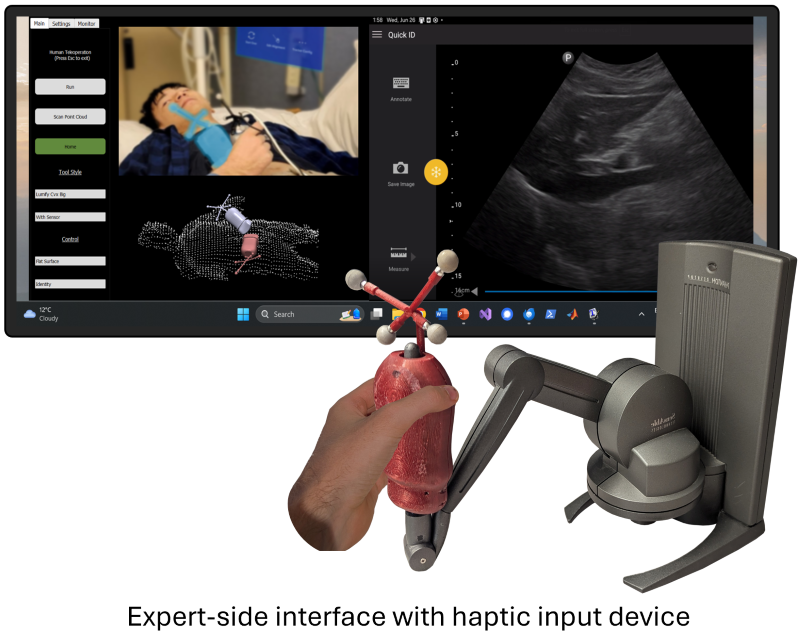

We use a patent-pending combination of real-time, hand-over-hand augmented reality guidance, high-speed communication, and force feedback to give the expert the sensation of performing a scan in person, while in fact they are guiding the motions of a novice in a remote location.

Our approach is based on hundreds of conversations with physicians, health authorities, remote and Indigenous communities, and industry experts, and has been validated in award-winning published research.

The novice wears a mixed reality headset and aligns their real ultrasound probe with a virtual one. The motion of the virtual probe is controlled in real time by a remote expert, who manipulates a haptic input device. The expert sees the live-streamed video and ultrasound images, communicates verbally with the novice, and receives force feedback through the haptic device, so it feels as if they are performing the scan in person.

This venture was born in the Robotics and Control Laboratory at the University of British Columbia, Vancouver, Canada. We are carrying out extensive research on the technical system, instrumentation, control, human-computer interaction, vision, clinical feasibility, and more.

We are scientists and engineers with deep expertise in medical imaging, teleoperation, and mixed reality, and with a shared vision of improving health equity.

Interested in a pilot, research partnership, or otherwise getting involved? Let's talk.